n_jobs in Random Forest

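Random forests work well with high-dimensional data, since each tree is built on a subset of the data and each split considers only a subset of the features. Consider timing a test function with `timeit.timeit(test_func, number=100)` when `n_jobs=1`.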


This returns 13 seconds.
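A minimal sketch of such a timing comparison, assuming scikit-learn's `RandomForestClassifier` and a synthetic dataset from `make_classification` in place of the post's data. The helper `fit_forest` is hypothetical and stands in for `test_func`. Absolute timings depend on the machine, so no numbers are claimed here.

```python
import timeit

from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in for the post's dataset (an assumption, not the original data).
X, y = make_classification(n_samples=1000, n_features=20, random_state=0)


def fit_forest(n_jobs):
    """Hypothetical test function: fit one forest with the given n_jobs."""
    clf = RandomForestClassifier(n_estimators=100, n_jobs=n_jobs, random_state=0)
    clf.fit(X, y)
    return clf


# Time a couple of fits per setting; results vary from machine to machine.
timings = {}
for n_jobs in (1, -1):
    timings[n_jobs] = timeit.timeit(lambda: fit_forest(n_jobs), number=2)
    print(f"n_jobs={n_jobs}: {timings[n_jobs]:.2f} s")
```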

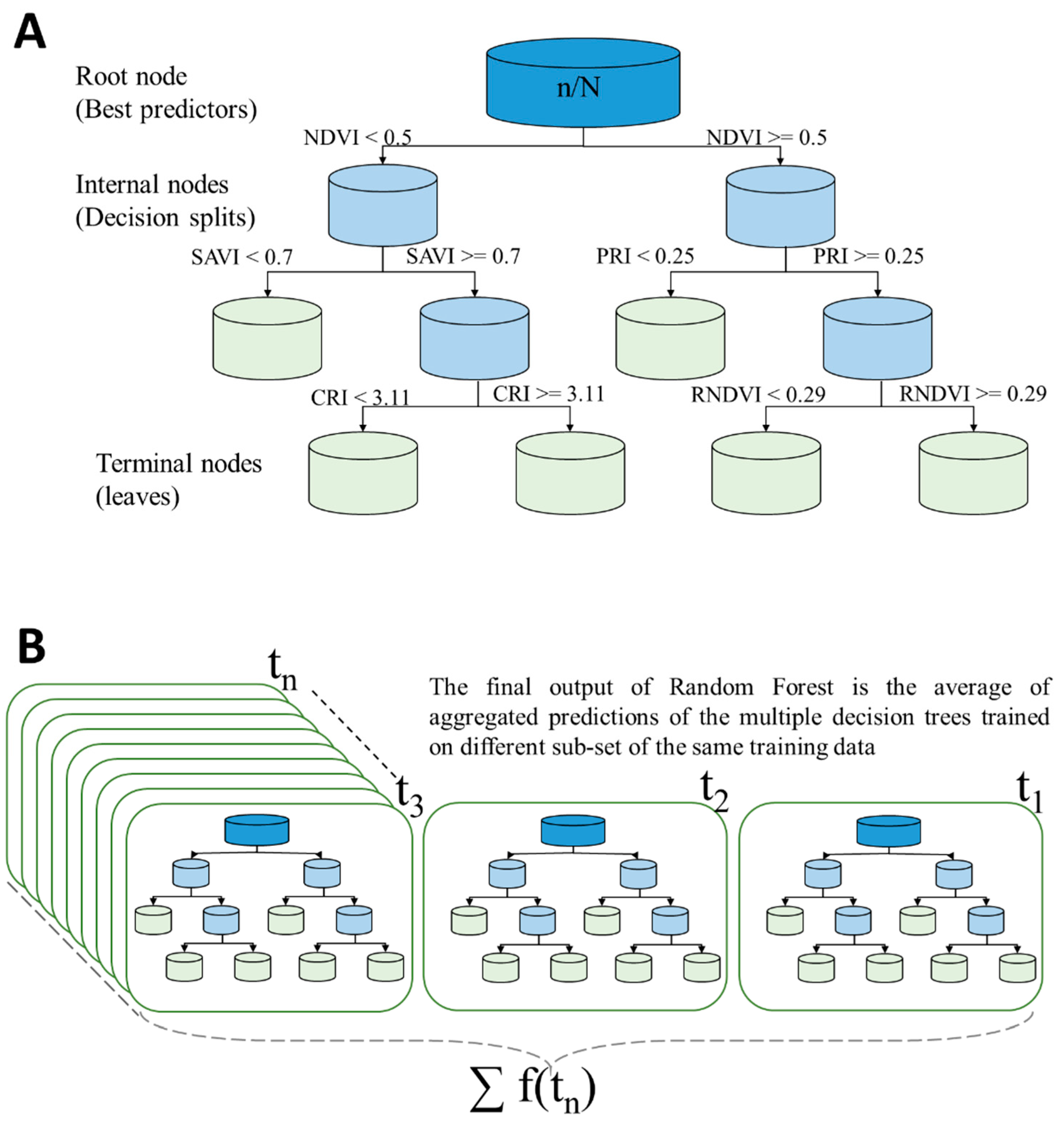

If `n_jobs=-1` or any value of 2 or more, the work is run in parallel. If 1 is given, no joblib parallelism is used at all, which is useful for debugging. A random forest is a meta estimator that fits a number of decision tree classifiers on various sub-samples of the dataset and uses averaging to improve the predictive accuracy and control over-fitting.
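The `n_jobs=1` behaviour comes from joblib, which scikit-learn uses underneath. A small sketch with `joblib.Parallel` and `joblib.delayed`, where `math.sqrt` is only a placeholder workload: with `n_jobs=1` the calls run sequentially in the current process, which keeps tracebacks and debuggers simple. The `prefer="threads"` hint is a choice made here to keep the sketch lightweight; joblib's default backend would use separate processes.

```python
from math import sqrt

from joblib import Parallel, delayed

# n_jobs=1: no parallel machinery, calls run one after another in this process.
sequential = Parallel(n_jobs=1)(delayed(sqrt)(i ** 2) for i in range(10))

# n_jobs=2: the same calls are dispatched to two worker threads.
parallel = Parallel(n_jobs=2, prefer="threads")(delayed(sqrt)(i ** 2) for i in range(10))

# Results come back in input order either way.
print(sequential == parallel)  # True
```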

The signature includes `max_leaf_nodes=None, bootstrap=True, oob_score=False, n_jobs=1, random_state=None, verbose=0, warm_start=False`. The model is `ExtraTreesClassifier(class_weight='balanced', n_jobs=8)`, and the parameter grid starts with `criterion`. For each split, the random forest selects a subset of the features, say 2·√5000 ≈ 141 words.
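The feature-subset arithmetic can be checked directly. This sketch reproduces the post's 2·√5000 example and, for comparison, the rule scikit-learn applies when `max_features="sqrt"`, which is `max(1, int(sqrt(n_features)))`. Which rule to use is a tuning choice, not something the post prescribes.

```python
import math

n_features = 5000  # distinct words after stemming and stop-word removal

# The post's example: roughly 2 * sqrt(n_features) candidate words per split.
post_example = round(2 * math.sqrt(n_features))

# scikit-learn's max_features="sqrt" considers max(1, int(sqrt(n_features))) features.
sklearn_sqrt = max(1, int(math.sqrt(n_features)))

print(post_example, sklearn_sqrt)  # 141 70
```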

A random forest classifier is one example of an ensemble; popular ensembles of decision trees include bagged decision trees, random forests, and gradient boosting. `n_estimators` is the number of trees you want to build within a random forest before aggregating the predictions.
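To make "aggregating the predictions" concrete, here is a sketch on synthetic data, assuming scikit-learn's `RandomForestClassifier`, which aggregates by averaging the trees' class-probability estimates. Averaging `predict_proba` over the fitted `estimators_` by hand should match the forest's own `predict_proba`.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X, y = make_classification(n_samples=300, n_features=10, random_state=0)
forest = RandomForestClassifier(n_estimators=25, random_state=0).fit(X, y)

# Stack each tree's class-probability estimates, then average across trees.
per_tree = np.stack([tree.predict_proba(X) for tree in forest.estimators_])
manual_average = per_tree.mean(axis=0)

print(len(forest.estimators_))                               # 25
print(np.allclose(manual_average, forest.predict_proba(X)))  # True
```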

Deep decision trees may suffer from overfitting, but random forests reduce overfitting by building trees on random subsets of the data. Most scikit-learn estimators that support parallelism take an `n_jobs` parameter, which controls how many parallel jobs joblib uses in methods such as `fit` and `predict`.
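The "random subsets" are bootstrap samples: rows drawn with replacement, so each tree sees some rows several times and never sees others. A NumPy sketch of one bootstrap draw, using a large hypothetical row count so the out-of-bag share settles near its expected value of about 1/e.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples = 100_000  # hypothetical number of training rows

# One bootstrap sample: draw n_samples row indices with replacement.
bootstrap_idx = rng.integers(0, n_samples, size=n_samples)

# Rows never drawn are "out of bag" for the tree trained on this sample.
in_bag = np.zeros(n_samples, dtype=bool)
in_bag[bootstrap_idx] = True
oob_fraction = 1.0 - in_bag.mean()

print(round(oob_fraction, 3))  # expected to be close to 1/e ≈ 0.368
```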

Word frequency is used as the feature value (it could also be TF-IDF). `n_jobs` is an integer specifying the maximum number of concurrently running workers. Random forests vs. decision trees:
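A sketch of both feature choices feeding a forest, assuming scikit-learn's `CountVectorizer` (raw word counts) and `TfidfVectorizer`. The four-text corpus and its labels are made up for illustration, and no stemming is applied here, unlike the post's preprocessing.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer
from sklearn.pipeline import make_pipeline

# Hypothetical short texts and labels, for illustration only.
texts = [
    "card payment declined again",
    "great service from the bank",
    "card blocked after payment",
    "friendly staff and great service",
]
labels = [0, 1, 0, 1]

predictions = {}
for vectorizer in (CountVectorizer(stop_words="english"), TfidfVectorizer(stop_words="english")):
    # Word counts or TF-IDF weights become the feature values for the forest.
    model = make_pipeline(
        vectorizer,
        RandomForestClassifier(n_estimators=50, n_jobs=-1, random_state=0),
    )
    model.fit(texts, labels)
    name = type(vectorizer).__name__
    predictions[name] = model.predict(["payment declined"])
    print(name, predictions[name])
```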

Decision trees are computationally faster. For `n_jobs` below -1, `(n_cpus + 1 + n_jobs)` workers are used. See the Glossary for more details.
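The negative-value rule can be written out as a small function. `workers_for` is a hypothetical helper, not a library API (joblib ships its own `joblib.effective_n_jobs`). It follows joblib's documented convention: `None` means 1 outside a backend context, -1 means all CPUs, and values below -1 give `n_cpus + 1 + n_jobs`, never fewer than one.

```python
def workers_for(n_jobs, n_cpus):
    """Hypothetical helper: number of workers implied by an n_jobs value."""
    if n_jobs is None:
        return 1  # None means 1 unless a joblib backend context says otherwise
    if n_jobs == 0:
        raise ValueError("n_jobs == 0 has no meaning")
    if n_jobs < 0:
        # -1 -> all CPUs, -2 -> all but one, ... clamped to at least one worker
        return max(n_cpus + 1 + n_jobs, 1)
    return n_jobs


for value in (None, 1, 4, -1, -2, -20):
    print(value, workers_for(value, n_cpus=8))
```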

This is quite different from setting it to some positive integer greater than 1, which creates multiple Python processes, each at 100% usage. If `n_jobs=1`, it uses one processor. `n_jobs` is the number of jobs to run in parallel.

This dictates how many decision trees should be built. For computational efficiency alone, `oob_score`, `n_estimators`, `random_state`, `warm_start`, and `n_jobs` are just a few parameters that come to mind. If -1, the number of jobs is set to the number of cores.
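Of these, `warm_start` is the easiest to misread. A sketch on synthetic data, assuming scikit-learn's forests: with `warm_start=True`, raising `n_estimators` and calling `fit` again keeps the trees already built and only trains the extra ones.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# warm_start=True keeps fitted trees and only adds new ones on the next fit.
forest = RandomForestClassifier(n_estimators=20, warm_start=True, n_jobs=-1, random_state=0)
forest.fit(X, y)
first_trees = list(forest.estimators_)

forest.set_params(n_estimators=50)  # ask for 30 more trees
forest.fit(X, y)

print(len(forest.estimators_))  # 50
print(all(a is b for a, b in zip(forest.estimators_[:20], first_trees)))  # True
```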

-1 means using all processors. In this article I will focus on the Random Forest Regression model; if you want a practical guide to getting started with machine learning, refer to this article.

In the case of `None`, the list of models has no capped length. `random_state` makes the output replicable. Many machine learning algorithms support multi-core training via an `n_jobs` argument set when the model is defined.
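A sketch of the replicability point on synthetic data, assuming scikit-learn's `RandomForestClassifier`: two forests with the same `random_state` grow identical trees even when built with different `n_jobs`. Predictions are compared with `np.allclose` rather than exact equality because threads may sum the per-tree probabilities in a different order.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# Same random_state -> same per-tree seeds, regardless of the number of jobs.
a = RandomForestClassifier(n_estimators=30, random_state=42, n_jobs=1).fit(X, y)
b = RandomForestClassifier(n_estimators=30, random_state=42, n_jobs=-1).fit(X, y)

same_trees = all(
    np.array_equal(ta.tree_.threshold, tb.tree_.threshold)
    for ta, tb in zip(a.estimators_, b.estimators_)
)
print(same_trees)                                          # True
print(np.allclose(a.predict_proba(X), b.predict_proba(X)))  # True
```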

The dataset comprises details of customers to whom a bank has sold a credit card. There are 5000 distinct words in the training set after stemming and removal of stop words. First off, I will explain in simple terms, for all the newbies out there, how random forests work, and then move on to a simple implementation of a Random Forest Regression model.

If set to -1, all CPUs are used. For example, with `n_jobs=-2`, all CPUs but one are used. Returns `res`, an `OptimizeResult` (SciPy object).

If `n_jobs=-1`, the number of jobs is set to the number of cores available. Because random forests use many instances of `DecisionTreeClassifier`, they share many of the same parameters, such as `criterion` (Gini impurity or entropy/information gain), `max_features`, and `min_samples_split`. The regression counterpart is the random forest regressor.
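A sketch of that parameter sharing, assuming scikit-learn's estimators and synthetic data: comparing `get_params()` of the forest and of a single tree separates tree-level settings from forest-only ones such as `n_jobs`, and the tree-level values set on the forest show up on each fitted tree.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.tree import DecisionTreeClassifier

forest_params = set(RandomForestClassifier().get_params())
tree_params = set(DecisionTreeClassifier().get_params())

# Tree-level settings exposed on the forest, and settings only the forest has.
shared = sorted(forest_params & tree_params)
forest_only = sorted(forest_params - tree_params)
print(shared)
print(forest_only)

# Tree-level settings given to the forest are passed through to every tree.
X, y = make_classification(n_samples=200, n_features=8, random_state=0)
forest = RandomForestClassifier(
    n_estimators=5, criterion="entropy", max_features="sqrt", min_samples_split=4, random_state=0
).fit(X, y)
first_tree = forest.estimators_[0]
print(first_tree.criterion, first_tree.max_features, first_tree.min_samples_split)  # entropy sqrt 4
```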

Random Forest Model in R: The Dataset. This can be a blessing and a curse, depending on what you want. The text to classify is short.

`model_queue_size`: int or None, default … This affects not just the training of the model but also its use when making predictions. A higher value will take longer to …

`[4, 10, 20], 'min_samples_split':` The number of jobs to run in parallel. Suppose I have the following setup.

A random forest is a set of multiple decision trees. On average, a text is 10 words long.

The code runs on Windows 10 64-bit, on an Intel i7 4770K or a Ryzen R7 1700 processor, with the same problem on both. `n_estimators`: integer, default 10 (changed to 100 in scikit-learn 0.22). A random forest is difficult to interpret, while a decision tree is easily interpretable and can be converted to rules.

But a random forest offers lots of parameters to tweak to improve your machine learning model. CART is used as the tree model. `[2, 4, 8], 'max_depth':`

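Great with high dimensionality. `[3, 10, 20]}` The search is then run with `clf = GridSearchCV(model, parameters, verbose=3, scoring='roc_auc', cv=StratifiedKFold(y_train, n_folds=5, shuffle=True), n_jobs=1)` followed by `clf.fit(X_train.values, y_train.values)`; this `StratifiedKFold` signature is from the old `sklearn.cross_validation` module. The link to the dataset is provided at the end of the blog.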
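A sketch of the same setup against the current `sklearn.model_selection` API, where `StratifiedKFold` takes `n_splits` and no longer receives `y`. The data is a synthetic, imbalanced stand-in for the credit-card dataset, `n_estimators=20` is chosen only to keep the run short, and the grid is a hypothetical reconstruction: the value lists echo the fragments scattered through this post, but which key goes with which list is a guess. Parallelism is kept in the model (`n_jobs=8`) while the search stays sequential (`n_jobs=1`), so the two levels do not compete for the same cores.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import ExtraTreesClassifier
from sklearn.model_selection import GridSearchCV, StratifiedKFold

# Synthetic, imbalanced stand-in for the post's training data (assumption).
X_train, y_train = make_classification(
    n_samples=500, n_features=20, weights=[0.8, 0.2], random_state=0
)

model = ExtraTreesClassifier(class_weight="balanced", n_estimators=20, n_jobs=8)

# Hypothetical grid: values echo the post's fragments, key assignment is a guess.
parameters = {
    "criterion": ["gini", "entropy"],
    "min_samples_split": [2, 4, 8],
    "max_depth": [3, 10, 20],
}

# Current API: n_splits instead of n_folds, and y is passed at fit time.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

# The model parallelizes over trees; the grid search itself runs sequentially.
clf = GridSearchCV(model, parameters, verbose=3, scoring="roc_auc", cv=cv, n_jobs=1)
clf.fit(X_train, y_train)
print(clf.best_params_, round(clf.best_score_, 3))
```

With pandas inputs, as in the original call, `X_train.values` and `y_train.values` can be passed the same way.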

Specifically, in Python, to run this on all available cores, pass `n_jobs=-1`; the -1 indicates using all available processors on the machine (it does not spread the work across multiple machines). `n_jobs` is the number of jobs to run in parallel for `fit` and `predict`.

`fit`, `predict`, `decision_path`, and `apply` are all parallelized over the trees. `n_jobs`: integer, optional (default=1). The number of jobs to run in parallel for both `fit` and `predict`. There are some new parameters that are used in the ensemble process.
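A sketch of the two less familiar methods, assuming scikit-learn's `RandomForestClassifier` on synthetic data. `apply` returns one leaf index per sample per tree, and `decision_path` returns a sparse node-indicator matrix for all trees plus `n_nodes_ptr`, the column offsets marking where each tree's nodes start.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X, y = make_classification(n_samples=200, n_features=8, random_state=0)
forest = RandomForestClassifier(n_estimators=10, n_jobs=2, random_state=0).fit(X, y)

# apply: leaf index reached by each sample, one column per tree.
leaves = forest.apply(X)
print(leaves.shape)  # (200, 10)

# decision_path: (samples x all nodes) indicator, plus per-tree column offsets.
indicator, n_nodes_ptr = forest.decision_path(X)
print(indicator.shape[0], len(n_nodes_ptr))  # 200 11
```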

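Training the random forest model on more than one core is usually faster than on a single core, although the speed-up depends on the number of trees and the size of the data. `oob_score`: whether to use out-of-bag samples to estimate the generalization score, a built-in alternative to cross-validation. I noticed that setting it to -1 creates just one Python process and maxes out the cores, causing CPU usage to hit 2500% in `top`. This is expected: scikit-learn's forests build trees with joblib's thread-based backend, so the parallel work runs as threads inside one process.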
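A sketch of the out-of-bag estimate on synthetic data, assuming scikit-learn's `RandomForestClassifier`: each sample is scored only by the trees whose bootstrap sample left it out, giving a validation-style score without a separate hold-out set. `oob_score=True` requires `bootstrap=True`.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X, y = make_classification(n_samples=500, n_features=10, random_state=0)

# Out-of-bag scoring reuses the rows each tree never saw during training.
forest = RandomForestClassifier(
    n_estimators=100, oob_score=True, bootstrap=True, n_jobs=-1, random_state=0
).fit(X, y)

print(round(forest.oob_score_, 3))  # accuracy estimated on out-of-bag samples
```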

See the scikit-learn documentation for further details. `None` means 1 unless in a `joblib.parallel_backend` context. `model_queue_size` keeps the list of models only as long as the argument given.
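A sketch of the `None` case, assuming joblib's `parallel_backend` context manager (newer joblib releases also offer `parallel_config` for the same purpose). Inside the `with` block, a forest left at `n_jobs=None` takes its worker count from the context, here two threads, instead of falling back to 1.

```python
from joblib import parallel_backend
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# n_jobs=None defers to the active joblib context.
forest = RandomForestClassifier(n_estimators=40, n_jobs=None, random_state=0)

# Within this block, None resolves to the context's two threading workers.
with parallel_backend("threading", n_jobs=2):
    forest.fit(X, y)

print(len(forest.estimators_))  # 40
```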

`n_jobs`: how many processors the model can use.


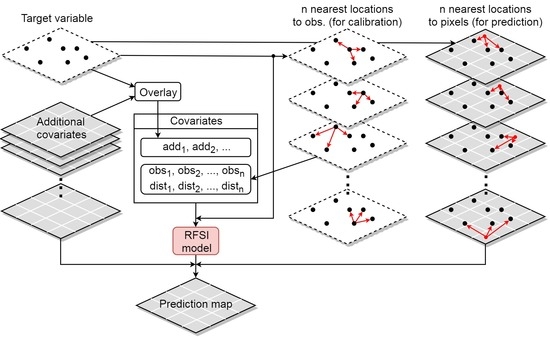
